Comprehending the Digital Humanities

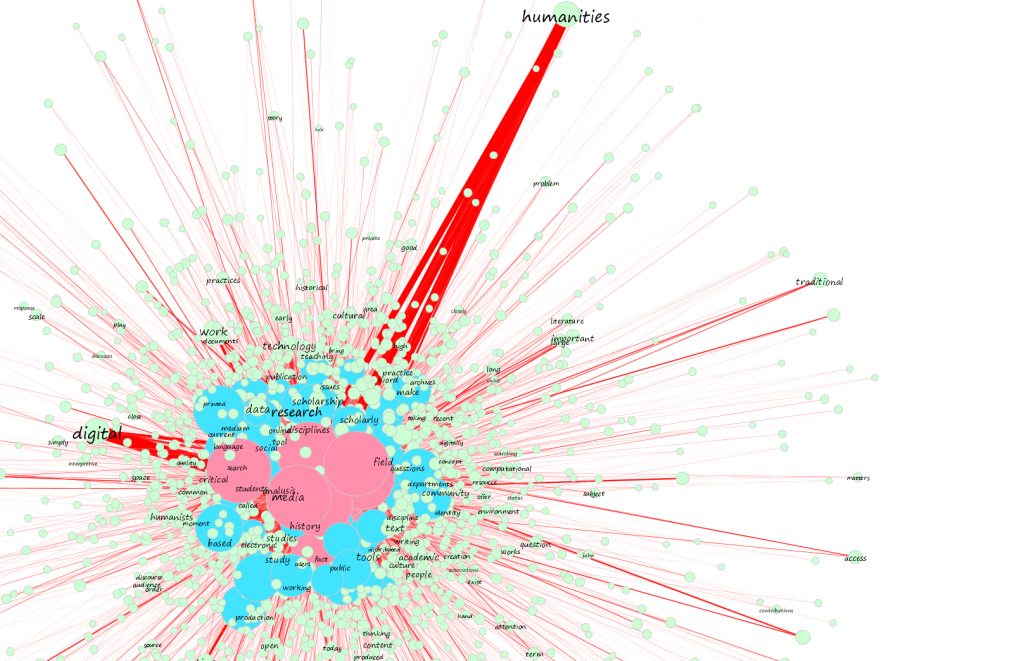

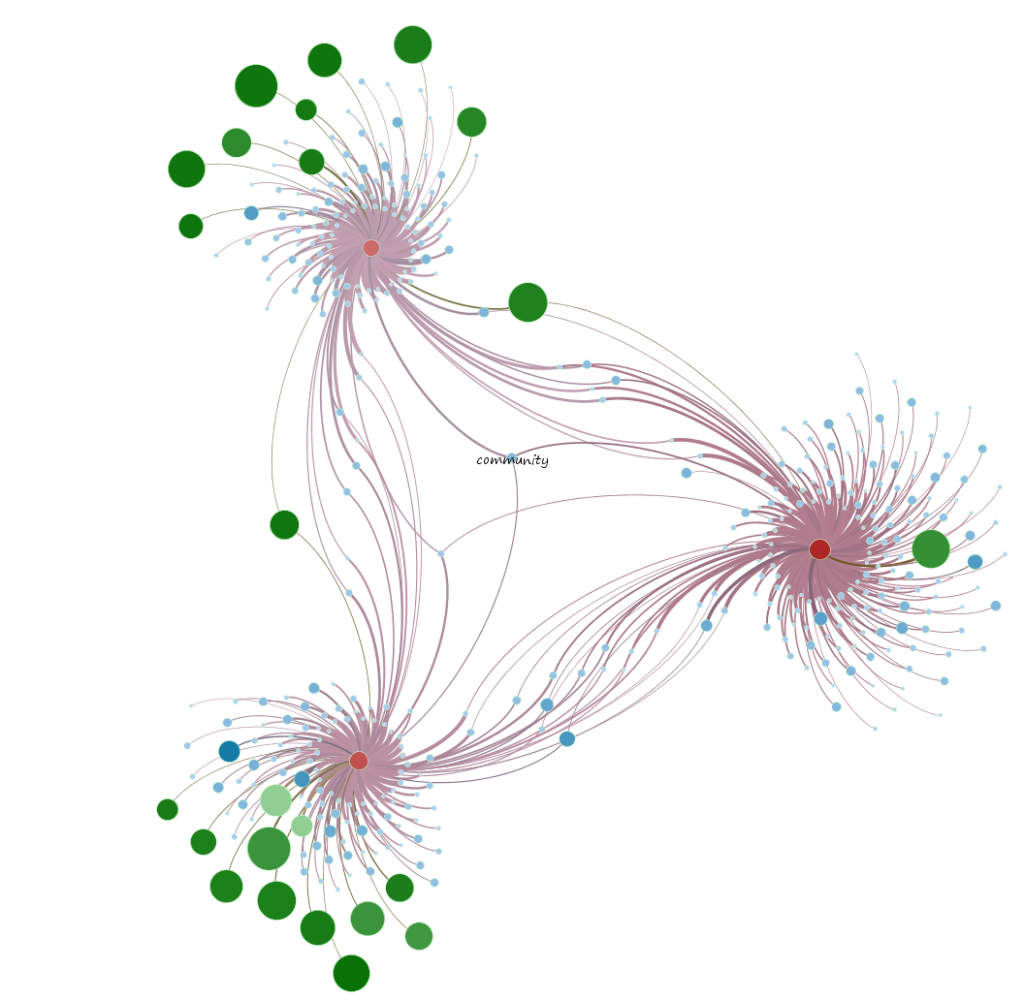


I decided to run this quick project after working a bit with the MALLET topic modeling toolkit from UMass Amherst. While I don’t have a background in text analysis, I found topic modeling extremely useful when combined with other techniques I was more familiar with. The most interesting thing I noticed was that MALLET’s output seemed well suited to network representation. As with the DH@Stanford Graph, I felt that bringing MALLET to bear on a small subject I was familiar with would help me understand both the tool and the technique. For my analysis, I chose a small corpus from the Digital Humanities sub-genre of “Humanities Computing / Digital Humanities definition”. Many members of the DH community have tried their hand at explicitly defining Humanities Computing or the Digital Humanities or, failing that, at addressing core issues related to how that community identifies itself.

I found fifty such texts after a cursory search, followed by a few private requests as well as a quick note to the Humanist mailing list. They range in size and formality from blog posts to peer-reviewed articles and chapters. The authors are faculty, graduate students and staff in academia as well as industry professionals.

After identifying the texts of this corpus, I ran two drafts of analysis and interpretation of the MALLET output using Gephi. For the final draft, I loaded the texts into a MySQL database and cleaned them quite a bit, replacing various words with their lemmas where that seemed applicable. More sophisticated methods exist, such as part-of-speech tagging or identifying Language Action Types, but I wanted this to be an exploration of these two tools, directed toward practical explanations of how to use them, so I did not invest heavily in corpus processing. Still, I wish I’d been a bit more thorough: I noticed that “tweet” and “tweets” are treated as two separate words, among other errors.
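The lemma replacement described above was done in MySQL, and the post does not list the actual replacements. As a rough illustration of the idea only, here is a minimal dictionary-based sketch in Python; the `LEMMAS` table and the `normalize` helper are hypothetical, and a word form left out of the table (like “tweets” in this corpus) simply survives as a separate word.

```python
import re

# Hypothetical lemma table: illustrative entries, not the replacements
# actually made for this corpus.
LEMMAS = {
    "tweets": "tweet",
    "humanists": "humanist",
    "computers": "computer",
}

def normalize(text: str, lemmas: dict[str, str]) -> str:
    """Lowercase the text and swap each listed word form for its lemma."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        return lemmas.get(word, word)  # unlisted words pass through unchanged
    return re.sub(r"[a-z]+", swap, text.lower())

print(normalize("Tweets about tweet archives", LEMMAS))
# tweet about tweet archives
```

A lookup table like this is easy to audit, but it only catches the forms someone thought to list; a real lemmatizer or part-of-speech tagger would be the more thorough route mentioned above.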

I kept all words with more than 10 instances, plus any words with fewer than 10 instances that were connected to four or more topics. Because topic-to-paper connections are given as proportions in the MALLET output, I created a comparable connection strength for words to topics by dividing the number of instances of that word in that topic by the total number of instances of that word in the corpus. For example, if there were 100 instances of “Google” and 13 of those instances were associated with Topic 5, then the Google-to-Topic 5 connection would have a strength, or weight, of .13. MALLET can also produce a word-weight output, but in this case I chose to forgo it in favor of this incidence percentage.
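The filtering rule and the weight formula above fit in a few lines. This is a minimal sketch, assuming the per-word topic counts have already been collected into a dictionary of `{word: {topic: count}}`; the function names are hypothetical, and a word with exactly 10 instances (which the rule leaves unspecified) is treated here like the under-10 case.

```python
def keep_word(per_topic: dict[int, int], min_count: int = 10, min_topics: int = 4) -> bool:
    """Keep words with more than min_count instances, or spread over min_topics or more topics."""
    return sum(per_topic.values()) > min_count or len(per_topic) >= min_topics

def word_topic_weights(counts: dict[str, dict[int, int]]) -> dict[tuple[str, int], float]:
    """Weight of word -> topic = instances in that topic / all instances of the word."""
    weights = {}
    for word, per_topic in counts.items():
        total = sum(per_topic.values())
        for topic, n in per_topic.items():
            weights[(word, topic)] = n / total
    return weights

# The "Google" example from the text: 100 instances, 13 of them in Topic 5.
# How the other 87 are split is made up for the illustration, as is "rare".
counts = {"google": {5: 13, 2: 87}, "rare": {1: 2, 3: 1}}
kept = {word: c for word, c in counts.items() if keep_word(c)}
weights = word_topic_weights(kept)
print(weights[("google", 5)])  # 0.13
print("rare" in kept)          # False
```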

MALLET will output data in the following format with the `--output-doc-topics` option:

```
#doc source topic proportion ...
0 iucn\10004.txt 11 0.13 25 0.1 5 0.09 39 0.08 33 0.05 15 0.04 19 0.04 16 0.04
1 iucn\10005.txt 11 0.24 8 0.13 5 0.13 27 0.09 14 0.04 13 0.04 28 0.03 18 0.03
2 iucn\10006.txt 1 0.15 5 0.13 11 0.08 25 0.07 19 0.07 17 0.07 20 0.07 16 0.06
```

This shows the association between a document (columns 1 and 2) and a topic (column 3), along with its strength (column 4). Columns 5 and 6, 7 and 8, 9 and 10, and so on are progressively lower-ranked associations of that document with the listed topic. Reading this, Document 0 (“10004.txt”) is associated with Topic 11 with a strength of .13, with Topic 25 with a strength of .1, with Topic 5 with a strength of .09, with Topic 39 with a strength of .08, and so on. Please note that this particular output is not from this project (hence the topic numbers greater than 19). These proportions are used directly as the weights of the links between topics and documents.
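Turning these rows into topic-to-document links is mostly a matter of pairing up the fields after the file name. Below is a minimal sketch, assuming the sorted topic/proportion layout shown above (later MALLET releases may lay this file out differently, so check the header of your own output) and file names without spaces; `doc_topic_edges` and `read_doc_topics` are hypothetical helpers. The sample rows are raw strings because of the backslash in the Windows-style paths.

```python
def doc_topic_edges(line: str) -> list[tuple[str, str, float]]:
    """Turn one document row into (topic, document, weight) edges."""
    fields = line.split()
    source, pairs = fields[1], fields[2:]  # fields[0] is the document index
    # The remaining fields alternate topic number and proportion,
    # ranked from the strongest association to the weakest.
    return [
        (f"Topic {topic}", source, float(proportion))
        for topic, proportion in zip(pairs[0::2], pairs[1::2])
    ]

def read_doc_topics(lines) -> list[tuple[str, str, float]]:
    """Collect edges from every row, skipping the '#doc ...' header and blank lines."""
    edges = []
    for line in lines:
        if not line.strip() or line.startswith("#"):
            continue
        edges.extend(doc_topic_edges(line))
    return edges

sample = [
    "#doc source topic proportion ...",
    r"0 iucn\10004.txt 11 0.13 25 0.1 5 0.09 39 0.08",
]
for edge in read_doc_topics(sample):
    print(edge)
```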

MALLET will also output the following with the `--word-topic-counts-file` option:

```
0 open 16 45 11 32 4 28 17 4
1 minutes 1 4
2 video 17 27 5 2
3 viral 5 4
4 part 12 93 13 73 2 12 19 5 0 2
5 group 14 34 8 33 7 10 16 1
6 parodies 5 2
7 based 17 56 12 56 1 42 19 24 8 10
8 german 8 7 14 3 6 1
9 austrian 5 1
10 film 16 9 11 5 5 5
```

Here, the first two columns give the word’s index and the word itself, while the remaining columns are topic/count pairs for as many topics as contain instances of that word. So “film” has 9 instances in Topic 16, 5 instances in Topic 11 and 5 instances in Topic 5.
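Reading a row of this file back into per-topic counts is a small parsing step. This minimal sketch assumes the alternating topic/count layout shown above, and also accepts `topic:count` tokens in case your copy of the file writes the pairs that way (an assumption worth checking against your own output); `parse_word_topic_counts` is a hypothetical helper. The loop applies the word-to-topic weight from earlier to the “film” row.

```python
def parse_word_topic_counts(line: str) -> tuple[str, dict[int, int]]:
    """Parse 'index word topic count topic count ...' into (word, {topic: count})."""
    fields = line.split()
    word, rest = fields[1], fields[2:]  # fields[0] is the word's index
    if rest and ":" in rest[0]:
        # Assumed alternative layout: 'topic:count' tokens.
        pairs = [tok.split(":") for tok in rest]
    else:
        # Layout shown above: alternating topic and count fields.
        pairs = list(zip(rest[0::2], rest[1::2]))
    return word, {int(topic): int(count) for topic, count in pairs}

word, counts = parse_word_topic_counts("10 film 16 9 11 5 5 5")
total = sum(counts.values())  # 9 + 5 + 5 = 19
for topic, n in counts.items():
    print(f"{word} -> Topic {topic}: {n / total:.2f}")
# film -> Topic 16: 0.47
# film -> Topic 11: 0.26
# film -> Topic 5: 0.26
```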

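Transforming this into graph data produces the following (the weight of “film” to Topic 16 is its 9 instances in that topic divided by its 19 instances overall, or .47):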

| Source | Target | Weight |
| --- | --- | --- |
| film | Topic 16 | .47 |
| Topic 16 | What Is Digital Humanities and What’s It Doing in English Departments? | .23 |

To treat this as a topic network, I kept only topic-to-paper and word-to-topic connections with a weight greater than 10%. I did this partly for manageability and partly because, as Gephi currently exists, it does not take edge weight into account when detecting communities, so the many low-weight connections (known as edges in the parlance of network analysis) were reducing the statistical significance of the identified topic modules.
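The 10% cutoff and the hand-off to Gephi can be sketched together: drop weak edges, then write the rest as a CSV edge table. This is a minimal sketch, assuming edges are already `(source, target, weight)` tuples and relying on Gephi’s spreadsheet importer recognizing `Source`, `Target` and `Weight` column headers in an edges table; `write_gephi_edges` is a hypothetical helper, and the “parodies” edge is made-up data included only to show the filter.

```python
import csv
import sys

THRESHOLD = 0.10  # keep only connections stronger than 10%

def write_gephi_edges(edges, out, threshold: float = THRESHOLD) -> int:
    """Write edges above the threshold as a Source,Target,Weight CSV; return how many were kept."""
    writer = csv.writer(out)
    writer.writerow(["Source", "Target", "Weight"])
    kept = 0
    for source, target, weight in edges:
        if weight > threshold:  # strictly greater, so an edge at exactly 10% is dropped
            writer.writerow([source, target, f"{weight:.2f}"])
            kept += 1
    return kept

edges = [
    ("film", "Topic 16", 0.47),
    ("Topic 16", "What Is Digital Humanities and What's It Doing in English Departments?", 0.23),
    ("parodies", "Topic 5", 0.08),  # hypothetical weak edge, filtered out
]
kept = write_gephi_edges(edges, sys.stdout)
```

In practice `out` would be a file opened with `newline=""`, as the `csv` module recommends, rather than standard output.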

I present the results on the Topology page, with many visualizations and not too many explanations. It wasn’t my intent to demonstrate that such-and-such’s blog post was more central than so-and-so’s paper. Given the small sample size, the rough preparation and the quick implementation, I think of this as an attempt to draw attention to topic network analysis and to the treatment of topics, words and texts as topologies. Hopefully, you find this as useful and enjoyable as I did.

Elijah,

This is great work! I’ll be sharing it with my digital humanities class at Illinois: it provides a very nice way to see what people are talking about in the selected articles. I take it from the write-up above that this selection was itself based on a topic (defining digital humanities) and recommendations from the community: that seems like a perfectly reasonable way to create the corpus for a first pass at the problem, and the issues you identify as candidates for “Further Research” (in that section of this site) are all interesting. Do you think there are further research questions around the selection of articles itself? What did you think were the limitations of the method of selection you used, and what would be some alternate methods? This would, by the way, make a great poster…either the conference kind or the CafePress kind.

Thanks, John, I’m glad you found it interesting.

Identifying texts was much more difficult than I would have thought, partly because of the contentious nature of the subject. Along with general searches, recommendations and personal knowledge, I also followed citation networks (whether scholarly or through tweets, Wikipedia references or blog links). Finally, I also wanted to make sure to add representative works by scholars who had been referenced often but for whom I couldn’t find a vanilla “Defining the Digital Humanities” paper. I think a discerning eye could pick them out, and could also tell that they actually seemed to fit right in.

As to posters, well, these are all SVG, and would scale to any size. We could craft one into a 500′ banner for DH11…

Great work! I find it particularly interesting how you connect the network of terms / documents and topics together in order to show their relationships and the communities they form. I’d like to see what happens if you apply it in other contexts, for example, sampling the coverage of a certain topic in different newspapers or let’s say Wikileaks cables etc.

I know you already saw this, but maybe the others will be interested in my article on a similar subject: http://deemeetree.com/current/text-network-analysis/ (note the Flash navigator on the left side of this page that we developed – basically it attempts to do a similar thing – grouping various pages on the basis of their belonging to the same context / cluster).

Dmitry

I follow your posts with attention: great stuff!

Clement