One of the projects I’m supporting this year is an analysis of neighborhood similarity of cities in the United States. The similarity measure is based on a set of attributes and can be represented as a matrix, which can in turn be represented as a graph or network. Once in network form, it can easily be visualized and network community detection can take place, allowing the network to be compressed into a more manageable form if the community detection reveals a strong signal. Visually, that means a 3000×3000 matrix can be made to look like this:

The nodes are colored by module, which is to say that the colors mark regions of the network where links within the region are more likely than links between that region and other regions. The modularity score measures how strong that pattern is. In this case, we have a network with .785 modularity, meaning at most 21.5% of links run between modules. But any such graph representation runs into a problem typical of network layouts: the position of a node (or even of an entire group of nodes) can place it spatially close to nodes from which it is very distant on the network. This spatial illusion damages the credibility of network visualization because we presume that objects shown next to each other in a two-dimensional image are close to each other. In a network layout, though, objects may sit next to each other only because they are being pulled toward connected nodes in vastly different parts of the graph.
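For readers who want to check the relationship between a modularity score and the share of links that cross modules, here is a minimal sketch in plain Python. It is not the code used for this project (the function name, the toy edge list, and the community labels are all invented for illustration) and it assumes an undirected, weighted edge list with the standard modularity definition: the within-community share of link weight minus the share expected if links were rewired at random with the same degrees. Because that expected share is never negative, the cross-community share can be no larger than one minus the modularity.

```python
from collections import defaultdict

def modularity_and_cross_share(edges, community):
    """Return (modularity, cross-community share) for an undirected edge list.

    edges: iterable of (u, v, weight) tuples (hypothetical input format).
    community: dict mapping each node to its community label.
    """
    total = 0.0                   # total edge weight in the network
    within = defaultdict(float)   # edge weight inside each community
    degree = defaultdict(float)   # summed weighted degree of each community
    cross = 0.0                   # edge weight running between communities
    for u, v, w in edges:
        total += w
        cu, cv = community[u], community[v]
        degree[cu] += w
        degree[cv] += w
        if cu == cv:
            within[cu] += w
        else:
            cross += w
    # Observed within-community share minus the share expected at random.
    q = sum(within[c] / total - (degree[c] / (2 * total)) ** 2 for c in degree)
    return q, cross / total

# Toy data: two triangles joined by a single bridge (illustrative only).
toy_edges = [
    ("a", "b", 1.0), ("b", "c", 1.0), ("a", "c", 1.0),
    ("d", "e", 1.0), ("e", "f", 1.0), ("d", "f", 1.0),
    ("c", "d", 1.0),
]
toy_community = {"a": "A", "b": "A", "c": "A", "d": "B", "e": "B", "f": "B"}

q, cross_share = modularity_and_cross_share(toy_edges, toy_community)
print(f"modularity = {q:.3f}, cross-community share = {cross_share:.3f}")
```

In the toy network one link in seven crosses communities, which is well under one minus the modularity, so the bound holds with room to spare.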

This creates an illusion of similarity that is difficult to adequately explain to an audience. Fortunately, when we have the chance to compress large quantities of data, we can better explain the structures at work in a network visualization of this kind. In this case, our strong community signal allows us to compress ~3000 nodes into just 13. If we draw each link between communities with the combined strength of the connections it summarizes, which together make up the cross-community share of links discussed above (the 21.5% figure), and size each community node by its share of those connections, we get a better understanding of how much of the structure of the more complex network is illusory.
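The compression step described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the workflow actually used here: the function name, the input format, and the toy data are hypothetical, the network is treated as undirected and weighted, and community labels are assumed to be sortable values such as strings.

```python
from collections import defaultdict

def collapse_to_communities(edges, community):
    """Collapse an undirected weighted edge list into community meta-nodes.

    Returns (meta_edges, sizes):
      meta_edges maps a sorted pair of community labels to the summed
        weight of all links between those two communities;
      sizes maps each community to its share of cross-community link
        endpoints, usable as a relative node size.
    """
    meta_edges = defaultdict(float)
    cross_weight = defaultdict(float)
    for u, v, w in edges:
        cu, cv = community[u], community[v]
        if cu == cv:
            continue  # within-community links disappear in the compressed view
        meta_edges[tuple(sorted((cu, cv)))] += w
        cross_weight[cu] += w
        cross_weight[cv] += w
    # Each cross link is counted once at each end, so the shares sum to 1.
    total = sum(cross_weight.values())
    sizes = {
        c: (cross_weight[c] / total if total else 0.0)
        for c in set(community.values())
    }
    return dict(meta_edges), sizes

# Toy data: five nodes in three communities (illustrative only).
toy_edges = [
    ("a1", "a2", 1.0), ("a2", "b1", 2.0), ("b1", "b2", 1.0),
    ("b2", "c1", 1.0), ("a1", "c1", 1.0),
]
toy_community = {"a1": "A", "a2": "A", "b1": "B", "b2": "B", "c1": "C"}

meta_edges, sizes = collapse_to_communities(toy_edges, toy_community)
print(meta_edges)
print(sizes)
```

Sizing by share of cross-community endpoints is one reading of "their share of those connections"; sizing by member count or total degree would be equally easy to swap in.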

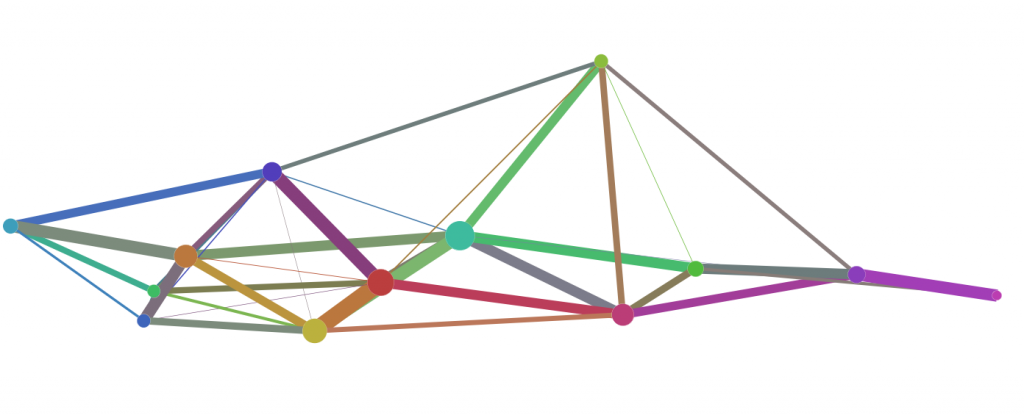

The above image is the same network, though compressed into community meta-nodes. It can act as an inset map of sorts, letting the reader see at a glance which regions of the full network layout are spatially misleading. I didn’t want to move the nodes, because I wanted to leave this process as straightforward as possible, but obviously some slight adjustment should be made so that the connections between communities are not obscured. It is in cases like this that curved edges, which bother many people, prove extremely useful.

The resulting image, combined with the modularity score of the network, provides what I consider to be strong evidence for the validity of the network visualization. While in some cases communities are pulled near communities with which they hold little similarity, the overall structural patterns are distinct and the chains of similarity from community to community to community reinforce the apparent structure of the individual nodes in the more complex network visualization.

That structure would provide a researcher with a useful scholarly path to explore, if it weren’t for the fact that this network is invalid. It turns out the method for determining the similarity between the neighborhoods needs to be retooled. The final network may look just like this, or it may not look anything like this. With that in mind, I find it particularly interesting that it makes no difference in the usefulness of this network from a methodological perspective.

Exploratory analysis is typically construed to mean exploration of the material for its content as it applies to a research agenda, but much of what is done under the auspices of the digital humanities is exploration of the methods (either in theory or as enshrined in tools) for their suitability to a research area. It seems healthier to think one can sample and experiment with a variety of methods, and find methodological successes even when forced to retreat from claims that advance a particular research question. Or it could be that my position in supporting research allows me to value the methodological component more highly than the traditional research agendas.

Either way, the use of inset maps that show a compressed version of a network, when there are valid methods available of compressing such networks, is a useful method in network cartography.

Elijah: would you write more about the city-similarity project? I’ve been working on my own version of neighborhood similarity in Gephi and elsewhere, exclusively using migration data [http://www.irs.gov/uac/SOI-Tax-Stats---Free-Migration-Data-Downloads]. Where can I find more information about the project you’re associated with?

Dan, we haven’t published any results yet on that project, and don’t expect to until February. But when we do, I’ll be sure to make note of it.

It seems to me that there is a limit to the use of visualizations for analytical purposes. Just because a dataset can be easily visualized doesn’t mean it should be. While visualizations can help non-techy folks handle large datasets, there are immense (and often very technical) caveats about the biases and limitations inherent in the object of analysis. I think the assumption in a visualization is that the image is there to communicate something, when this isn’t always the case. Visualizations can be an extremely powerful tool; I just hope we as researchers and information professionals keep a strong, clear line between the process of analysis and potential (or malicious) misdirection for our audiences. Take this lesson from climate science!